Featured Blog

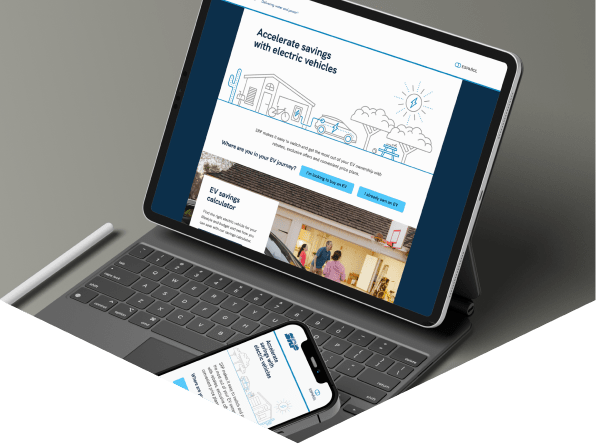

Explore the partnerships that give us access to the latest tech stacks and platforms to better fuel your takeoff.

Unleash your potential with market-leading tools, tailored to your business needs.